Four RCC staff were fortunate to attend this year’s Supercomputing Conference (SC23) in Denver, Colorado from 12–17 November.

RCC Director Professor David Abramson, Chief Technology Officer Jake Carroll, eResearch Analyst Dr Marlies Hankel, and Research Computing Systems Engineer Owen Powell have each written reports reflecting on their highlights from the world’s largest supercomputing conference.

This was the first time Marlies and Owen attended the conference, and as Marlies said, it “pretty much blew my mind.”

Links to the four reports:

- RCC Director Professor David Abramson

- RCC CTO Jake Carroll

- RCC eResearch Analyst Dr Marlies Hankel

- RCC Research Computing Systems Engineer Owen Powell

SC23: From inspiring, challenging to head-splitting talks

By RCC Director Professor David Abramson

The first thing to note is that SC seems to be pretty much back to pre-COVID attendance levels, with a vibrant conference and show floor.

As usual, there is way too much going on to attend everything, but the following contains a few highlights from me.

The conference kicked off with a stunning keynote address from Dr Hakeem Oluseyi, an astrophysicist, former Space Science Education Lead for NASA, and an inspirational speaker.

Dr Oluseyi spoke with passion about his childhood, early years, and the somewhat unconventional path he has followed to his current position. He was born into a world of disadvantage, racism, crime and drugs, but managed to grasp the opportunities that came his way. It was a truly inspirational talk about rising above adversity, being knocked back again and again, and finally succeeding.

He spoke about the mentors he met along the way, and how they helped him achieve even when he didn’t believe in himself. Almost everyone I spoke to after the talk was awestruck by just how much he has achieved, and the passion with which he is giving back to encourage others to enter into a career in STEM (science, technology, engineering and mathematics).

As panels vice-chair for SC24, I made sure I got to as many panels as I could this year. These included one on “Carbon-Neutrality, Sustainability, and HPC”, with speakers Daniel Reed (University of Utah), Andrew Chien (University of Chicago), Robert Bunger (Schneider Electric), Genna Waldvogel (Los Alamos National Laboratory (LANL)), Nicolas Dubé (Hewlett Packard Enterprise (HPE)), and Esa Heiskanen (Finnish IT Center for Science).

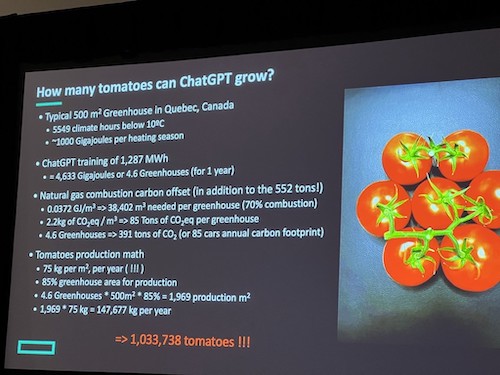

Perhaps the most memorable slide presented in this panel, shown below, looked at the energy requirements in training ChatGPT in equivalent units of tomato production!

Perhaps the most memorable slide presented in this panel, shown below, looked at the energy requirements in training ChatGPT in equivalent units of tomato production!

I also attended a few events on quantum computing, including a panel titled “Quantum Computing and HPC: Opportunities and Challenges for New Companies in the Field of HPC”, with Martin Schulz (Technical University Munich, Computer Architecture and Parallel Systems, Leibniz Supercomputing Centre); Santosh Kumar Radha (Agnostiq); Jan Goetz (IQM); Andrew Ochoa (Strangeworks); Tommaso Macrì (QuEra); and Michael Marthaler (HQS Quantum Simulations).

Fred Chong (University of Chicago, Infleqtion) gave a head-splitting talk entitled “Physics-Aware, Full-Stack Quantum Software Optimizations”. I think I got about halfway through this one before my headache started!

Amanda Randles (Duke University) gave a great talk entitled “Unlocking Potential: The Role of HPC in Computational Medicine”, and spoke of her work to build a vascular digital twin. Her work uses sensor data and advanced computational models to support in-silico vascular studies.

A somewhat robust panel on “HPC and Cloud Converged Computing: Merging Infrastructures and Communities” with Daniel Milroy (Lawrence Livermore National Laboratory); Michela Taufer (University of Tennessee, University of Delaware); Seetharami Seelam (IBM TJ Watson Research Center); Bill Magro (Google); Heidi Poxon (Amazon Web Services); and Todd Gamblin (Lawrence Livermore National Laboratory), discussed the issue of HPC and cloud computing. I am not sure they reached a conclusion, but it was a fascinating discussion mirroring some of the ones we have had in the Australian educational sector comparing on-premises HPC with commercial cloud offerings.

I also attended a panel entitled “The Impact of Exascale and the Exascale Computing Project on Industry” with Frances Hill (US Department of Defense), David Martin (Argonne National Laboratory (ANL)), Peter Bradley (Raytheon Technologies), Rick Arthur (GE Aerospace Research), Roelof Groenewald (TAE Technologies Inc), and Mike Townsley (ExxonMobil), which nicely summarised the role that exascale machines play in real-world industrial problems.

I was also privileged to hear award talks by my colleague and good friend Manish Parashar (Scientific Computing and Imaging (SCI) Institute, and University of Utah) who won the 2023 Sidney Fernbach Award. His talk entitled “Everywhere and Nowhere: Envisioning a Computing Continuum for Science” was a delightful discussion about applications of advanced computer science to key research problems.

I was also privileged to hear award talks by my colleague and good friend Manish Parashar (Scientific Computing and Imaging (SCI) Institute, and University of Utah) who won the 2023 Sidney Fernbach Award. His talk entitled “Everywhere and Nowhere: Envisioning a Computing Continuum for Science” was a delightful discussion about applications of advanced computer science to key research problems.

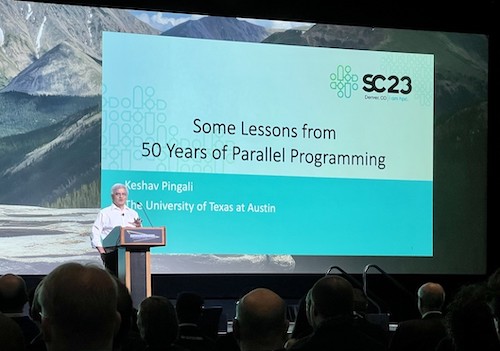

Keshav Pingali (University of Texas), who won the 2023 Ken Kennedy Award, gave a fascinating talk on “Some Lessons from 50 Years of Parallel Programming”; perhaps sobering that I recall many of the approaches and technologies he discussed!

Keshav Pingali (University of Texas), who won the 2023 Ken Kennedy Award, gave a fascinating talk on “Some Lessons from 50 Years of Parallel Programming”; perhaps sobering that I recall many of the approaches and technologies he discussed!

Overall trends and observations from SC23

By RCC Chief Technology Officer Jake Carroll

I try to take a different approach to SC each year I attend. In my seventh year attending SC, I wanted to engage more with research papers and workshops. I was rewarded with new perspectives, ideas and opportunities to bring home to UQ.

The network is once again the computer

One had only to glance at the heart of the SC show floor to notice the $20m US dollars' worth of network infrastructure powering “SCiNET” to understand the importance of a healthy data network technology industry.

In supercomputing, Ethernet networking technologies have traditionally been considered a compromise.

Currently, when compared to the market leader (Nvidia’s InfiniBand) Ethernet is generally: high in latency, exhibits large protocol overheads, has unsophisticated topological awareness and lacks adaptive routing.

Due to these factors, the standard Ethernet networking infrastructure of today is generally not fit for purpose in advanced, scientific, research and large-scale computing.

This deficiency has primarily been recognised by the hyperscale public cloud providers who can no longer run their data centres using standard Ethernet for all the above reasons.

I observe the industry trying to fix this however, in the form of the Ultra Ethernet Consortium (UEC). With companies such as AMD, Microsoft, Arista, Broadcom, Cisco, Intel, Oracle, HPE and Meta party to this new breed of Ethernet-based supercomputing interconnect, there is a real chance we may soon see some direct competition to Nvidia’s InfiniBand.

Those building chips shall inherit the workloads

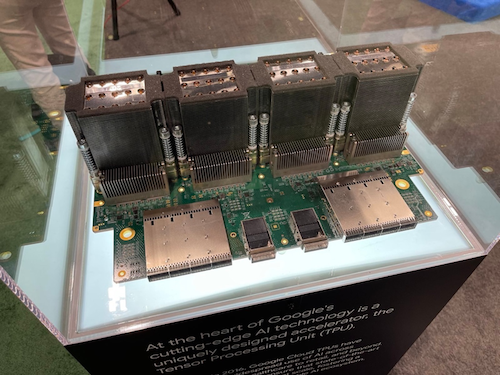

For companies large and profitable enough to invest in their own designs, custom silicon has become common. Google’s TPUv5e, Microsoft’s Azure Cobolt/Maia, Samba Nova Systems SN40L, Cerebras CS-2 and several others are producing dedicated AI and workload specific chips.

They are doing this for a few reasons. The most obvious reason is that when designing a cloud at hyperscale, controlling every aspect of the code your cloud runs, with the exact instructions, performance per watt and features you need means you can deliver a more competitive, potentially more cost-effective service to your clients. You might gain some competitive advantage in that process, too.

The less obvious reasons are contingency, supply chain diversification and risk management. With so much of the world relying on TSMC (Taiwan Semiconductor Manufacturing Company) right now, the complexity and the geo-political impact that the CHIPS Act of 2022 has created and the unusual circumstances some fabrication companies find themselves in due to their physical location — diversity is important.

For supercomputing, it potentially creates opportunities. Our processor technologies are once again turning to specificity rather than generalisation. Chiplet technology, as found in the AMD CPUs inside UQ’s Bunya supercomputer, is making this specialisation and customisation increasingly obtainable.

We may see an era of co-processors being built that are targeted and specific to the workloads we want, as opposed to CPUs that are designed to do many things relatively well, but not particularly efficiently.

Whether the increasingly diverse range of specialised co-processor technologies will be sold to us and are something we are allowed to own, as opposed to the technologies we can currently only rent from public cloud providers, is another matter. Examples of this ‘rent only’ technology include Google Cloud’s TPU and AWS’s Graviton.

Storage: Keeping it cooler

There was less commentary this year about who could build the fastest parallel filesystem and more discussion about how we’re going to maintain the data holdings of our world sustainably.

The quest for more throughput is still there but there was a more important discussion in the Future of Storage Workshop about energy efficiency.

We discussed the approaches we should be adopting to keep our data where it needs to be, based upon access requirements, as opposed to putting it in the fastest, most power inefficient place we can. This isn’t novel thinking. It is a practice that many large scientific research sites (such as UQ) have had in place for decades.

The difference now is that the public cloud providers and the on-premises centres need to navigate how to work transparently between each other whilst satisfying client needs.

The issue of network bandwidth kept coming up in the discussions. Not all sites have enough network bandwidth to make the movement of data between on-premises computing infrastructures and the public cloud seamless or even usable.

Storage density announcements from hard disk manufacturers such as Seagate and Western Digital see 24 to 32TB capacities achieved with various techniques such as energy assisted perpendicular magnetic recording ePMR, whilst IBM has shown its 50TB TS1170 “JF” magnetic tape media technology. Various senior industry commentators and observers predict NAND-flash memory will experience a cost increase shortly, due to global demand issues.

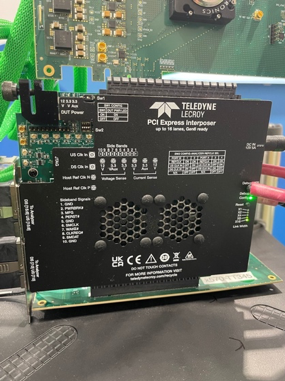

In the Composable Systems workshop, the CXL Interconnect coupled with the PCI-E standard were demonstrated with real world large memory and process sharing workloads. This is an exciting development, as the industry progresses further towards disaggregated data centres and modular building blocks for system components, as opposed to entire systems having to exist inside a node. A clear example might be a situation where a researcher needs a node that contains only 48 CPUs but requires 20TB of main memory (RAM) attached to it. This ordinarily would not be possible, but CXL.mem pools would mean that HPC administrators could simply add a “memory module canister” and dynamically attach that memory to a set of CPUs on the other side of the data centre, as if it were inside that node.

Thermal Design Points go up. Liquid cooling needs a re-think

The Thermal Design Point (TDP) projections of CPUs and GPUs are once again beyond the predictions from the previous year.

Where 700 to 1000W was the maximum design point explained by many chip vendors in 2022, we are now being informed that most of the advanced GPUs will reach around 1250 to 1400W in the 2024 timeframe.

This has created a pause for thought with many of the liquid cooling vendors, designers and the systems integration companies.

Where a certain rate of flow across the liquid pad was acceptable a year ago, it will shortly be inadequate.

If a system was set up to cool at a specific TDP a year ago, there is a significant likelihood that the next generation of CPUs and GPUs that will replace it will require a whole new cooling system, flow rates and diameter of pipe to dissipate heat adequately.

Full immersion cooling options are becoming more visible. There are more companies entering this market, but these solutions still have issues of ease of installation and the cost profile of other solutions.

Novel technologies such as reverse vacuum liquid cooling were demonstrated on the show floor, promising dripless, low-risk installations and new ways to ensure the safety of water near sensitive electrical equipment.

Quantum computing: Still formative

One of several invited speaker presentations I attended this year focused on quantum computing. I came away with the opinion that quantum computing still feels formative and has a great deal of room for growth before it proves practical to researchers in basic science. Developers of quantum systems continue to vie for the dominant market position with a confusing array of technologies being proposed, including:

- Quantum circuit systems

- Adiabatic quantum systems

- Superconducting quantum systems

- Photonic quantum systems

- Neutral atoms quantum systems.

It is unclear and unknowable at this stage which type of quantum system will go on to be the ubiquitously adopted technology that takes quantum mainstream. Some of the expert panel members suggested photonic and laser-based quantum systems held the most chance for mass adoption.

Coherence time and the ability to sustain a quantum state for a long enough time to achieve anything computationally significant kept being referenced, as did the inability to manipulate large data sets in quantum systems, as they currently stand. To this end, the practical scientific problem-solving potential of quantum systems seems limited at this point.

The continual rise of SHPC (Cybersecurity in High Performance Computing)

The second annual Workshop on Cybersecurity in High Performance Computing had more than 400 people present — a fourfold increase on the previous year.

The topics were both deeply technical (code correctness, exploit and threat hunting) and abstract (people, culture and processes).

An important takeaway for most attendees was that we must invent new cybersecurity techniques, tools and principles to fit our domain, technologies and use-cases.

When compared to enterprise ICT applications, there are aspects of HPC applications that operate differently in terms of privileges, performance and access expectations.

The workshop exemplified that we could build upon our foundations in innovation and problem solving to recast what traditional cybersecurity tools, techniques and principles need to be for our community.

Culture, inclusivity and diversity

SC is a worldly and uniquely inclusive conference. Almost every corner of Earth is represented. This year some of the best talks explained the journey people have taken to get into supercomputing. The talks made me sit with my own journey in research computing, my own history and my ancestry, reflecting on how I came to be here, as well as what each of our differences and unique perspectives allows us to contribute.

The quality of the talks, especially that of Dr Hakeem Oluseyi – globally renowned astrophysicist and scientific educator — gave us all something to reflect on. The theme of the conference this year was “I am HPC.” Indeed, I am HPC. We are HPC – and our culture, our shared vision and the reason we come to work every day and do what we do was never more evident when we got together with about 14,000 of our friends in Denver for Supercomputing 2023.

SC23 “pretty much blew my mind”

By Dr Marlies Hankel, RCC/QCIF eResearch Analyst

This was my first time at the Supercomputing Conference. I have attended many research conferences during my 20-year research career, some quite large with many thousand attendees and multiple streams, so I was curious how this would compare.

One of the first differences was there was official merchandise that one could purchase. T-shirts, hoodies, mugs, soft toys, and more. Sure, you still got the free merchandise at registration but there was so much more.

Once we had sorted out our check-in and bought some SC23 merchandise it was time to get to work. It started with two days of workshops. There was plenty to choose from and it was good that one could swap and move between the different workshops and streams easily. This was despite the size of the conference, and most could be walked to within a few minutes. I come from research and I am not trained as a technical person, so I went for the workshops on user and software support, and training.

It was great to see all the support and available features for Open OnDemand (OOD) which provides users with a web interface to easily launch a session on a HPC compute node with a graphical user interface desktop. These enable compute and visualisation and avoid having to use the command line in many cases. RCC has OOD available in a beta version for testing and this showed what is possible in terms of making high-performance computing easier for our users.

Another workshop went through the pitfalls and often hard work that is needed to port a code to multiple architectures, mainly different GPU architectures. This gave me several starting points for reading and material to include in our User Guide to help users to make more efficient use of the different available GPU architectures on Bunya.

A big surprise was that even large computer centres were still grappling with the onboarding of less traditional HPC domains and the way to offer useful training that is scalable. It seems we are all in the same steep learning curve.

At this stage, SC23 was like many other conferences I have been to. I found many doing the same thing and trying to get the same thing working as I, and reaffirming what I was doing while providing many new ideas and possible solutions.

At the end of the second day of workshops, SC23 showed a completely different face and pretty much blew my mind. After two days of workshops and read talks, the show floor officially opened. This was when the masses turned up, about 14,000 attendees, and I realised this was so much bigger than any conference I have ever experienced.

There were those you would expect on the show floor, such as Intel, AWS, and Dell, but I also found many European HPC centres (I am from Germany and have lived and worked in the UK), and many other hardware and more importantly software providers. I cannot deny that I totally embraced the “kid in the candy store” feeling and sniffed out all the merchandise (soft toys and T-shirts) but this hunt guided me past many vendors I would have missed otherwise.

Most interesting was Open OnDemand (no surprise really as I was primed from the workshops I had attended). I found out more about what help we can find to make new features available for our users. Talking to the team from Globus we found that Globus integration is coming to OOD. Talking to the team at Mathworks we found that MATLAB integration is also available.

The Intel and AMD teams showed me which software they are supporting and porting to their new GPU architectures and I was happy to see that many of the ones we have on Bunya will be supported.

The show floor was open for three days and I really needed that to take it all in and have time to go back to some vendors and ask more questions I had thought about.

The last day of SC23, after the show floor was closed, it was back to workshops once more. I learnt more about how to best deliver documentation and training materials to users and how to design communication. I have a lot to learn there and to streamline some of what we are doing.

SC is a very technical and hardware focused conference. Those, like me, who have come from research and work mostly with users might not immediately see how this conference could be of benefit to us and how we could contribute. But there was so much material on user support, software support, training and communication that I had to pick and choose, and I wish I would have had time to go to more talks.

It was a great conference and I am grateful that I was able to go and experience the show that is SC.

SC23: Boffins, sidebars and pinch-me moments

By Owen Powell, RCC Research Computing Systems Engineer

It was a privilege and pleasure to be able to attend SC23 this year alongside David, Jake and Marlies. I had to pinch myself when flying into Denver over the snowcapped Rockies.

A day spent in the sun checking out the botanical gardens, which already had a winter garden light display, helped me adjust to the time difference and set me up well for the whirlwind week ahead.

Having the chance to attend SC23’s workshops was great. There were well-considered presentations and discussions by researchers, support staff and technical boffins alike.

My first was a workshop for systems professionals and I left with new ideas about improvements that can be made to and some reassurance that we’re in a good place with our HPC clusters Bunya and Wiener to build out our capability.

There has been a lot of effort put in to providing reporting on resource and power utilisation for jobs run on HPC clusters and making those insights available to researchers so that they can make informed decisions about how best to request resources.

Another workshop on containers in HPC opened my eyes to several new technologies that deserve further investigation, such as Charliecloud.

There was a theme of inclusivity and diversity that ran through several workshops, the plenary and the keynote, which was given by Dr Hakeem Oluseyi who gave a candid and humbling account of his unlikely trajectory to academia. It was a fantastic talk and one I will not forget.

The week was accented by sidebars with hardware and software vendors, providing an opportunity to get tailored insights into what’s on the horizon and discuss what UQ needs to get the best outcomes for our researchers.

I was invited to meetings with engineers from Intel, AMD, HPE, Dell, and NVIDIA. I got some insight into future directions for hardware that will help to inform decisions as we consider our options over the next couple of years for building out Bunya’s hardware.

On the software side, we met one of the developers behind Open OnDemand and heard about the many ways that it is being used around the world, as well as what’s being worked on for the next release.

The developers who work on the IBM storage software that drives both Bunya’s scratch file system and our HPC-connected UQRDM (Research Data Manager) collections were also at SC23. I have worked some long and odd hours with them over the years and it was great to be able to meet some of them in person for the first time and talk about some of the wonderful science that’s being done with the platform as well as some of the challenges we face as we continue to scale those services out.

I also met with the developers of the HPE storage software that protects those RDM collections, and we were able to discuss future directions.

On the exhibition floor, which was huge, it was no surprise to see that GPUs for AI and Large Language Models (LLMs) were everywhere, from established entities such as NVIDIA, AMD and Intel, through to chipmakers who are taking on similar challenges with their own silicon, such as SambaNova who are providing LLM solutions for the likes of the US Department of Energy’s Lawrence Livermore National Lab.

Liquid cooling also featured heavily on the exhibition floor, with every kind of conceivable — and some novel —liquid cooling solution on display.

Several open-source software companies were also present, and it was nice to be able to talk directly with some of the staff from CIQ (who provide Rocky Linux, which we use as the base operating system for Bunya) as well as AlmaLinux and SUSE about their products and the trends they see in the management of HPC clusters.

The week flew by! It was such a rewarding experience and I’m much better prepared now to help build out UQ’s research computing and storage capability in the years ahead.