UQ is a step closer to participating in massive global data movement operations, thanks to an RDS-supported project between RCC, the Queensland Brain Institute and the University of California San Diego.

The project team has successfully trialled migrating data between UQ and UCSD using UQ’s new data fabric, MeDiCI (Metropolitan Data Caching Infrastructure).

This means that, in the near future, UQ researchers could take part in international automatic data migration, real-time data experimentation and scientific workflows.

Utilising UCSD’s newly formed partnership with AARNet as part of the Pacific Research Platform (PRP) initiative, the project set out to deliver transfers at 10Gbps, the maximum line rate available, between parallel file systems on either side of the Pacific Ocean.

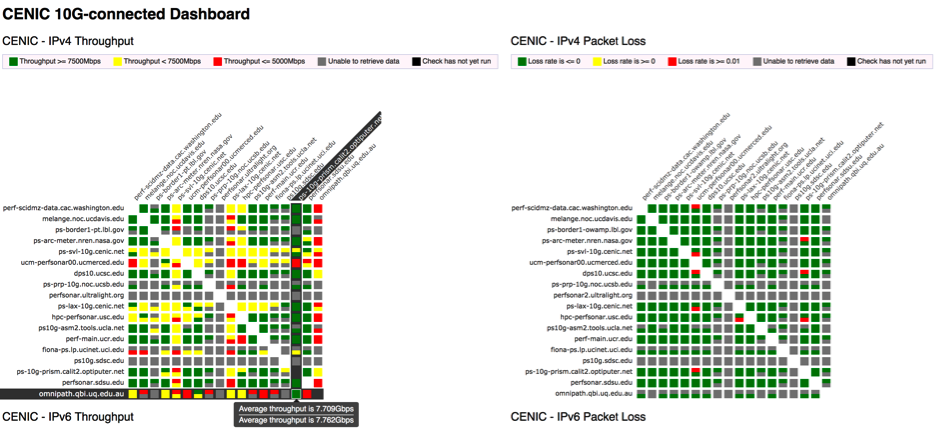

UQ and UCSD are also integrating their work into the CENIC (Corporation for Educational Network Initiatives in California) monitoring platform. MaDDash, as it is known, is a project that collects monitoring data and presents it as a set of grids referred to as a dashboard.

UQ is now an active part of the CENIC and PRP network, with its long-distance parallel filesystems initiative directly connected to this network. UQ now appears on the top-level monitoring dashboard of 10Gbps-connected perfSONAR testing endpoints. Widely deployed by science networks and facilities around the world, perfSONAR is a test and measurement infrastructure used to monitor and ensure network performance.

Jake Carroll, QBI’s Senior IT Manager (Research), spent a week at UCSD on the project (read his trip report below for more information). QBI is an active and ongoing partner in the design, engineering, testing and development of MeDiCI, and enables RCC to test data movement between UQ and UCSD for applications in cardiac science and microscopy.

Jake Carroll (QBI), UCSD trip report

From 7–11 November 2016, I spent a week at the University of California San Diego (UCSD) campus, in and out of Calit2 (California Institute for Telecommunications and Information Technology) and the SDSC (San Diego Supercomputer Center).

Whilst here, I worked on a UQ RCC/Calit2 initiative that was effectively an international-scale experiment in filesystems. The experiment was complex in scale and logistics and hard to coordinate: an exercise in ‘extreme’ project management, as well as in pushing the boundaries of what networking and filesystem technologies can do over long distances.

The project and experiment leverage previous work on UQ’s MeDiCI architecture, using the full potential of the parallel filesystem IBM Spectrum Scale (formerly GPFS) and its AFM (Active File Management) data locality, caching and tiering capabilities.

The journey the technology took to reach a working state was as much a virtual tour as a physical one. The Queensland Brain Institute, an active and ongoing partner in the design, engineering and development of RCC’s MeDiCI fabric, provided the test hosts. These hosts started life as experimental nodes, provided by Dell/EMC Inc., connected to an all-flash storage array (provided by IBM/SanDisk) in QBI’s data centre. They then moved across the UQ campus to optimise our network path to the United States West Coast. Finally, with the assistance of a large group of people (listed below), the test hosts landed in the RCC/QCIF network rack at the Polaris Data Centre. It was here that we achieved optimal network connectivity and line-rate efficiency to our neighbours across the ocean.

The project involves a lot of cross-institutional and international collaboration.

From Australia:

- UQ RCC: David Abramson, Michael Mallon

- UQ QBI: Irek Porebski

- UQ ITS Networks team: Marc Blum, Alan Ewer and Kelsey Walton

- AARNet: Chris Myers.

From the United States:

- UCSD Calit2: John Graham, Tom DeFanti, Philip Papadopoulos

- CENIC: John Hess.

Using a 100Gbps circuit, together with Calit2’s newly formed partnership with AARNet as part of the PRP (Pacific Research Platform) initiative, we set out to deliver a true ‘line rate’ 10Gbps high-bandwidth parallel filesystem all the way across the Pacific Ocean, from UQ to UCSD. To do this, we used building blocks we had already created as part of the MeDiCI architecture, including AFM and parallel I/O movement with multiple data movers.
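The report does not describe how work is divided between the data movers. As a rough illustration of why several movers help, here is a minimal sketch that spreads a list of files across a fixed number of mover nodes with a greedy "largest file first" rule, so each mover ends up with a similar number of bytes. This is a generic load-balancing heuristic chosen for illustration, not how Spectrum Scale AFM actually schedules parallel transfers; the function name `assign_to_movers` and the file names and sizes are hypothetical.

```python
import heapq
from typing import Dict, List, Tuple


def assign_to_movers(files: Dict[str, int], n_movers: int) -> List[List[str]]:
    """Hypothetical helper: greedy 'largest file first' assignment of files
    to data movers. Each file goes to whichever mover currently has the
    fewest bytes queued, so the movers finish at roughly the same time."""
    if n_movers < 1:
        raise ValueError("need at least one data mover")
    # Min-heap of (bytes_queued, mover_index): the least-loaded mover pops first.
    heap: List[Tuple[int, int]] = [(0, i) for i in range(n_movers)]
    heapq.heapify(heap)
    queues: List[List[str]] = [[] for _ in range(n_movers)]
    # Largest files first; ties broken by name so the result is deterministic.
    for name, size in sorted(files.items(), key=lambda kv: (-kv[1], kv[0])):
        load, idx = heapq.heappop(heap)
        queues[idx].append(name)
        heapq.heappush(heap, (load + size, idx))
    return queues


if __name__ == "__main__":
    # Hypothetical file sizes in GB, for illustration only.
    sample = {"scan_a.tif": 40, "scan_b.tif": 35, "scan_c.tif": 20,
              "scan_d.tif": 15, "scan_e.tif": 10}
    for i, queue in enumerate(assign_to_movers(sample, 2)):
        print(f"mover {i}: {queue}")
```

With the sample sizes, mover 0 receives 65GB and mover 1 receives 55GB. The greedy rule is fast but not always optimal: a perfect 60/60 split exists here. For bulk transfers, a near-balanced split is usually good enough.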

This all culminated in an 11,590km stretched parallel filesystem running at near line rate (10Gbps) on IBM Spectrum Scale, leveraging the very best academic and research networking capabilities anywhere in the world. In the near future, this will allow UQ to participate in massive international data movement operations, automatic data migration and ‘being there’ style data experimentation and workflows that were simply not possible previously.
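The distance involved hints at why line rate is hard to reach over such a path. Here is a back-of-envelope estimate of the minimum round-trip time and the bandwidth-delay product, which is the amount of data that must be in flight to keep a 10Gbps link full. It assumes the signal follows an 11,590km fibre route (real cable routes are usually longer) at about 200,000km/s (roughly two-thirds of the speed of light in a vacuum). These are illustrative assumptions, not measurements from this project.

```python
# Back-of-envelope sketch. Assumptions (not project measurements):
#   - the fibre route is the 11,590 km quoted in the text (real routes are longer)
#   - light in optical fibre travels at roughly 200,000 km/s (about 2/3 of c)
#   - the link runs at 10 Gbps


def min_rtt_seconds(distance_km: float, fibre_speed_km_s: float = 200_000.0) -> float:
    """Lower bound on round-trip time: propagation delay out and back only,
    ignoring queuing, switching and routing detours."""
    return 2 * distance_km / fibre_speed_km_s


def bdp_bytes(rate_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to keep the link full."""
    return rate_bps * rtt_s / 8


if __name__ == "__main__":
    rtt = min_rtt_seconds(11_590)
    window = bdp_bytes(10e9, rtt)
    print(f"minimum RTT: {rtt * 1000:.1f} ms")             # 115.9 ms
    print(f"in flight at 10 Gbps: {window / 1e6:.0f} MB")  # 145 MB
```

Roughly 145MB in flight is far more than typical default TCP buffer sizes. That is why long-haul transfers generally rely on tuned network buffers, many parallel streams, or both.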

With newly formed strong ties and excellent collaborations with our colleagues at UCSD and SDSC, we then moved on to integrating our new high-bandwidth links into the CENIC (Corporation for Educational Network Initiatives in California) monitoring platform. MaDDash, as it is known, is a project aimed at collecting and presenting two-dimensional monitoring data as a set of grids referred to as a dashboard. Many monitoring systems focus on one dimension, such as a single host or service; MaDDash offers a way to change that.

With traditional visualisation techniques for network flow data, trying to present naturally two-dimensional data one host or service at a time quickly leads to n-squared problems, both in back-end configuration and in front-end presentation: with n endpoints testing against each other, there are n(n-1) source/destination pairs to set up and display.
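The n-squared growth can be made concrete. If n perfSONAR endpoints each test against every other endpoint, the n(n-1) directional pairs form an unwieldy flat list, but they map naturally onto an n-by-n grid with sources as rows and destinations as columns, similar to the grid layout of a MaDDash dashboard. This is a generic sketch of that idea, not MaDDash’s configuration format or code; the host names and the "ok" status values are placeholders.

```python
from itertools import permutations
from typing import Dict, List, Optional, Tuple

Pair = Tuple[str, str]


def mesh_pairs(hosts: List[str]) -> List[Pair]:
    """Every directional (source, destination) pair in a full test mesh."""
    return list(permutations(hosts, 2))


def as_grid(hosts: List[str], results: Dict[Pair, str]) -> List[List[Optional[str]]]:
    """Arrange per-pair results as a grid: one row per source, one column per
    destination. The diagonal (a host testing itself) is None, as is any
    pair without a result."""
    return [[None if src == dst else results.get((src, dst)) for dst in hosts]
            for src in hosts]


if __name__ == "__main__":
    hosts = ["uq", "ucsd", "sdsc", "cenic"]  # hypothetical endpoint names
    pairs = mesh_pairs(hosts)
    print(len(pairs))                        # 4 * 3 = 12 pairs to configure
    results = {p: "ok" for p in pairs}       # placeholder status, not real data
    for row in as_grid(hosts, results):
        print(row)
```

Adding a fifth endpoint raises the pair count from 12 to 20, while the grid only gains one row and one column. This is why a two-dimensional view scales better for a mesh than a list of one-dimensional checks.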

UQ is now an active part of the CENIC and PRP network, with our long-distance parallel filesystems initiative ‘directly connected’ to it. As a result, we now appear on the international top-level monitoring dashboard of 10Gbps-connected perfSONAR testing endpoints. With these hosts as the basis for testing, UQ is now on the world (network) map!