Titanic’s sinking could have had a far less tragic outcome if a group of robots were on board that knew how to rescue people in the water.

Such thinking isn’t fantastical—these robots will likely be available in the future for any rescue mission, thanks to (machine) learning by trial and error.

A research team at UQ’s Centre for Advanced Imaging (CAI) is training the algorithms behind the artificial intelligence of such robots to think for themselves, drawing on the psychology of how humans and animals learn. And they’re using RCC’s high-performance computer, Wiener, to do so.

At CAI, Research Fellow Dr Maryam Ziaei, who has a PhD in Psychology and Neuroscience, and Adjunct Research Fellow Dr Reza Bonyadi, who has a PhD in Computer Science, are working on a long-term AI project titled: ‘Utilising psychological concepts to enhance reinforcement learning in artificial agents.’

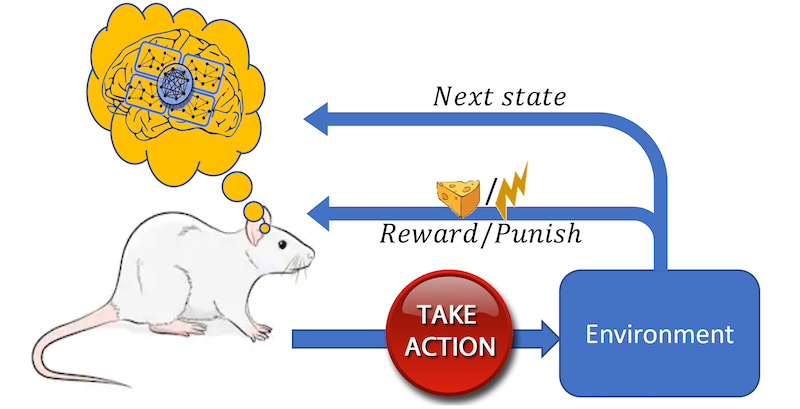

They are using psychological theories in animal and human learning to design an artificial agent, such as a robot, to effectively learn to accomplish tasks through trial and error.

As a starting point, Maryam is proposing to use the traditional concepts of ‘reward and punishment’ from the environments in which agents are learning.

“We have observed that an agent’s performance improves greatly when positive punishment, along with positive rewards, are being offered, suggesting the importance of integrating learning concepts into deep reinforcement learning areas,” said Reza.

“For a robot, for example, a positive punishment can be defined by an intrinsic self-punishment if the task was not completed successfully. While an electric shock can implement positive punishment for mice, the punishment for the robot could be simply implemented by reducing the value of a variable programmed to be kept at maximum in the robot’s mind.

“This is scalable to any environment as the robot punishes itself rather than the environment applying this punishment.
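The self-punishment idea Reza describes can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers’ implementation: the class name `SelfPunishingAgentState`, the `wellbeing` variable, the fixed `penalty` size, and the rule of subtracting the shortfall from the environment’s reward are all hypothetical choices made for the example.

```python
class SelfPunishingAgentState:
    """Hypothetical internal state for an agent that punishes itself.

    The agent holds a variable ("wellbeing") that it is programmed to keep
    at its maximum. Failing a task lowers it; the shortfall below the
    maximum is subtracted from the reward the environment provides.
    """

    def __init__(self, max_wellbeing=1.0, penalty=0.25):
        self.max_wellbeing = max_wellbeing
        self.penalty = penalty          # assumed fixed drop per failure
        self.wellbeing = max_wellbeing  # starts at the maximum

    def shaped_reward(self, env_reward, task_succeeded):
        """Combine the environment's reward with the intrinsic punishment."""
        if task_succeeded:
            # Success restores the variable to its maximum (an assumption).
            self.wellbeing = self.max_wellbeing
        else:
            # Failure reduces the variable, never below zero.
            self.wellbeing = max(0.0, self.wellbeing - self.penalty)
        intrinsic_punishment = self.max_wellbeing - self.wellbeing
        return env_reward - intrinsic_punishment


state = SelfPunishingAgentState()
rewards = [
    state.shaped_reward(1.0, task_succeeded=True),
    state.shaped_reward(0.0, task_succeeded=False),
    state.shaped_reward(0.0, task_succeeded=False),
]
```

Because the punishment is computed from the agent’s own state, the environment only needs to report success or failure, which is what makes the approach portable across environments in this sketch.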

“Such agents interact with the environment and learn how to respond optimally to maximise their rewards, hence, are able to learn a wide range of tasks without any need to be pre-programmed,” said Reza.

“This type of learning algorithm is scalable to learn complex tasks for which the best strategies are unknown. The strategies learned by the agent would be more robust to environmental changes as the agent can modify its own strategies if necessary.”

Consider, for example, a bushfire rescue mission in which a robot is sent to rescue people in an unknown, possibly unstable, national park. The robot has no accurate information about the environment until it observes it. Hence, during the mission, the robot needs to make fast and robust decisions, otherwise the mission would be likely to fail.

“Such a decision-making style sounds more like an ‘art’ than a technique that can be formalised and encoded into the robot’s mind. This encourages the idea of robots that learn on their own by trying and failing rather than by examples,” said Reza.

“A project of this kind has a potential to resolve long-standing questions in computer science and can deepen our understanding of psychological concepts in humans.”

This is a new area of research in computer science, with only a few research groups worldwide (Google’s DeepMind is one of the leaders) leveraging psychological concepts to tackle computer science problems.

“This project is a great example of multi-disciplinary work, where we leverage psychological concepts to help an agent learn through consequences of its behaviour,” said Maryam, who is also an affiliated Research Fellow with UQ’s Queensland Brain Institute.

Wiener’s role in this research

RCC’s high-performance computer Wiener, as a graphics processing unit (GPU) cluster, has helped make Maryam and Reza’s research possible.

Wiener is specifically for imaging-intensive research and artificial intelligence and machine learning research at UQ.

“Access to multiple GPUs at the same time was one of the most important benefits Wiener provided to us. It enabled training six agents in parallel [Wiener provides six GPUs per user in parallel] that saved a significant amount of time to run our experiments,” said Reza.

With Wiener, Reza used six GPU nodes, with 30 GB of RAM and four central processing unit (CPU) cores per node, for four weeks.

Their parameter analyses involved training three deep reinforcement learning agents on 20 tasks under 14 parameter conditions, and their final analyses tested these three agents on 20 tasks under two parameter conditions, for 400 compute runs in total.

The tasks used in this study were simple computer games, such as Breakout, from the Atari 2600, a home video game console of the 1970s and 1980s. The agents were asked to learn the games as fast as they could.

Each episode of each task required more than 600 steps to accomplish on average, meaning the weights of each deep reinforcement learning agent were tuned for more than 2.4 million steps.

RCC Chief Technology Officer Jake Carroll helped Maryam and Reza get to grips with Wiener. “Jake was very responsive, helpful and generous with his time. He assisted us to set up our Python environment and gave us invaluable advice to improve our computation capacity,” said Maryam.

Future of the research project and AI at CAI

The next steps of Maryam and Reza’s research project will involve testing the impact of other psychological and social concepts on the performance of artificial learning agents. For example, in a “society” of robots, what interactions between agents would lead to faster learning? What factors facilitate learning from observation among agents situated in a group? What would drive better transfer learning (where an agent that has learned one task can learn another more easily)?

“Transfer learning and how psychology defines it, social interactions, and even impacts of emotion on learning can be computerised and tested as a part of this project. This would shed light on many unknowns in the area of psychology and social sciences, while assisting the improvement of learning mechanisms in artificial agents,” said Maryam.

Overall, the project has the potential to achieve significant wins in the area of general-purpose AI.

Maryam and Reza have submitted an article about their work to the Neural Information Processing Systems (NIPS) conference for review, and are currently working on another manuscript that extends the work to different environments and more advanced psychological concepts.

They are also seeking funding for their work as it has huge potential for further development and is currently unfunded.

Meanwhile, CAI has been actively seeking ways to leverage AI in analyses of data, including images and signals.

So that UQ researchers don’t drown in a sea of big data, RCC will continue to look at how it can meet the increasing demand for data storage, technical data management and HPC power at UQ.

L–R: Dr Maryam Ziaei and Dr Reza Bonyadi.