By Jake Carroll

RCC Chief Technology Officer Jake Carroll presents his highlights and the IT industry trends from this year’s international supercomputing conference, SC19, held 17–22 November in Denver, Colorado, USA. Jake attended the conference alongside RCC Director Professor David Abramson.

IT industry trends

Supercomputers are becoming denser and more powerful, delivering more performance per square metre of floor space. However, this comes at a cost.

Cooling top-end high-performance computing (HPC) systems with air is becoming more difficult. Major industry players (Intel, AMD, Nvidia and others) have roadmaps with Thermal Design Power (TDP) figures well beyond 350 watts per processor.

This has substantial long-term implications for the way we design data centre cooling, the way we build HPC systems and the facilities required to house them.

The industry is moving towards liquid cooling delivered directly to central processing units (CPUs) and graphics processing units (GPUs). Readers who are old enough will know this isn't a new concept: liquid-cooled computing equipment has been around for decades. It is simply becoming, once again, the required way to achieve density and performance.

Software stacks for alternative acceleration technologies are becoming more cohesive and usable. UQ's HPC Wiener is powered by GPUs and field-programmable gate arrays (FPGAs), but other products, such as dedicated application-specific integrated circuits (ASICs), are on the rise, as are the frameworks that make them easier to use. Graphcore's Intelligence Processing Unit (IPU) is one example of a highly specialised chip that can outperform even GPUs on graph-based mathematics.

Intel is taking a holistic approach with oneAPI: it plans to provide a single programming interface, language and set of libraries so that the same code can run on CPUs, GPUs, FPGAs and ASICs. That is the big-picture promise of oneAPI.

Nvidia is partnering with parallel filesystem providers DDN and IBM (whose respective filesystems are Lustre and Spectrum Scale) to bring what it calls "Magnum IO" to the data storage industry. Magnum IO's main benefit is that it bypasses the CPU, enabling direct input/output (IO) between GPU memory and network storage. This should accelerate IO across a broad range of workloads, from artificial intelligence to visualisation. In the benchmarks we've seen, the improvements in storage throughput and latency are considerable. UQ already has all the infrastructure needed to implement this technology, but the filesystem support required will not be available for a few months yet. RCC will likely move quickly once it is available and pass these benefits on to our users.

The once unusual and esoteric InfiniBand is now widely accepted as the interconnect of choice for data storage connectivity. There was once some tension in the industry over whether Ethernet would prevail and replace InfiniBand in the supercomputing sector. It did not. Mellanox announced informally that it is now working on its Next Data Rate (NDR) InfiniBand generation, starting at 400 gigabits per second per server port, as the successor to High Data Rate (HDR, 200 gigabits per second per server port), to interconnect the next generation of supercomputing and machine learning platforms.

HPC scheduler discussions have become interesting again, with the roadmap for version 20 of the Slurm scheduler presented at the user group meeting. The Slurm Workload Manager (formerly known as Simple Linux Utility for Resource Management, or SLURM) is a free, open-source job scheduler used in supercomputing. GPU tracking and resource allocation have changed substantially; in fact, they have undergone a major rewrite. UQ's HPC Wiener will be upgraded to the latest OpenHPC version in early 2020, bringing many new Slurm capabilities.

The CPU market has diversified further, with strong competition coming from AMD's second-generation EPYC (Rome) CPU. A year ago, the industry and its customers were considering AMD as a potential competitor to Intel for the first time in many years. It is now clear that AMD is back in competitive form: benchmarks of the Rome platform show impressive results across a wide range of codes and applications commonly used in supercomputing.

Equally, Arm-based CPUs are becoming a more realistic option as their software stack and development environments (and, with them, the common HPC codes) mature. Of note, Fujitsu's Arm-based A64FX processor was on show at SC19, offering a unique architecture that appears more like a GPU than a traditional CPU.

As always, RCC continually evaluates all of these capabilities when planning future technologies, opportunities and infrastructure to support UQ's research computing and the delivery of scientific outcomes.

Invited talks

The invited talks at SC19 this year were some of the best I have seen. I spent more time on them than in previous years, as I find I benefit more from hearing others describe their projects and journeys than from raw technical and engineering presentations.

RCC Director Prof. David Abramson was this year's vice-chair of the invited speakers committee and invested a lot of time in selecting the talks, and it showed.

The quality, the science and the presentations were all absolutely world-class. Perhaps more importantly, they were captivating, entertaining and easily approachable.

What I did

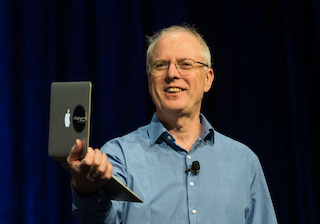

This year's conference was more 'relaxed' for me. I spoke at the IBM Spectrum Scale user group meeting, attended by more than 130 delegates from around the globe. These delegates were users, experts, engineers and designers of IBM's Spectrum Scale filesystem, the same filesystem that powers UQ's MeDiCI data storage fabric.

I spoke about our use of and experience with Spectrum Scale at both a technical and a managerial level, and demonstrated MeDiCI to the delegates. I then described how the data fabric supports instrument data generation and scientific data capture across UQ's campus, touching on our future plans and ideas.

I ended the presentation with a big-picture overview of RCC's CAMERA framework. CAMERA supports the complete life cycle of instrument-gathered data, making it seamlessly available across a range of instruments, cloud computers, desktops and HPC systems. I outlined what this means for repositories, for data management and for our ability to provide users with a consistent data namespace, offering both managed and unmanaged data storage options to our users and the wider campus.